
VETAR2 (Release R1)

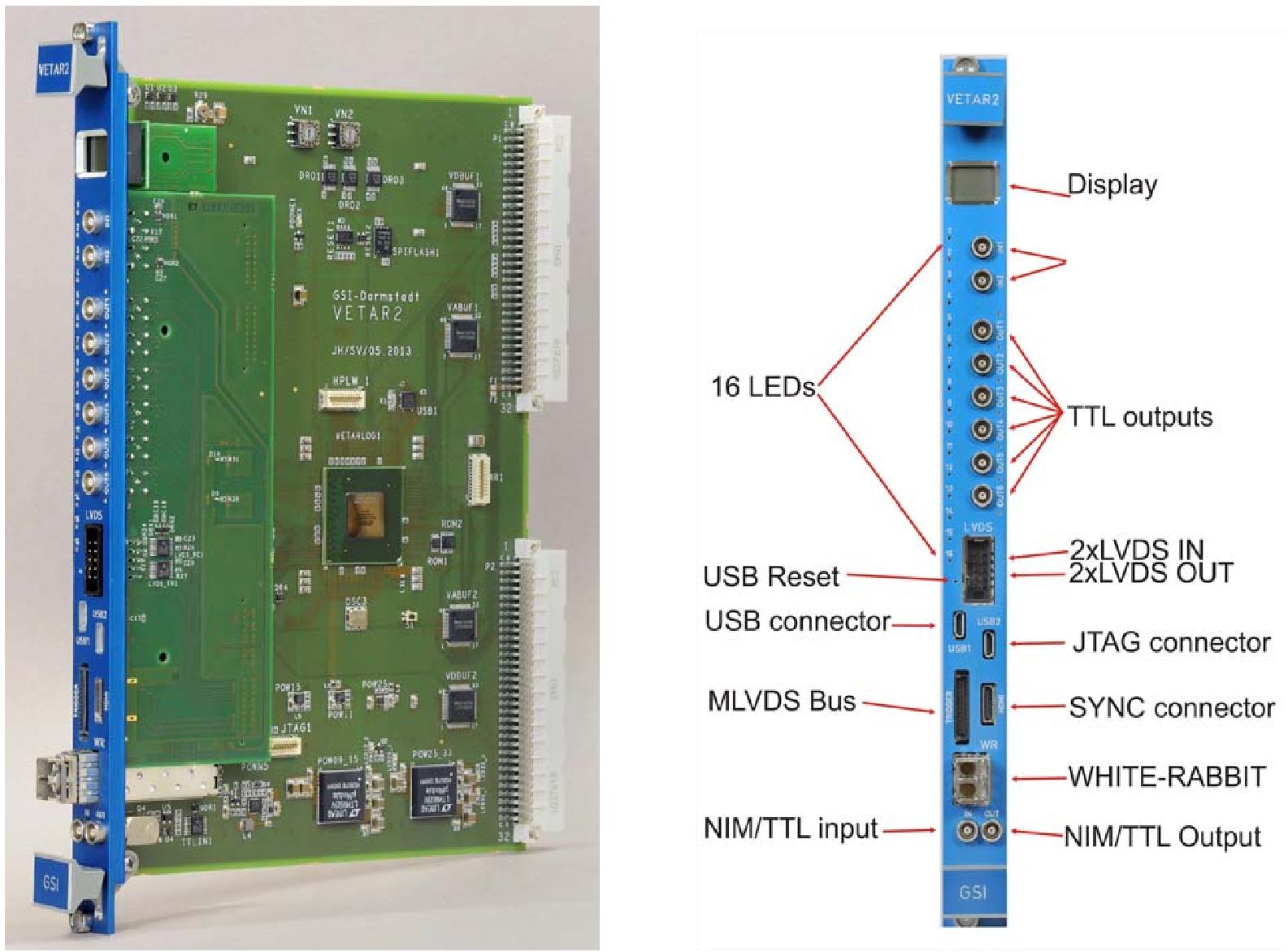

The VETAR2 is a VME carrier board that can be White-Rabbit enabled using the VETAR1DB2 add-on board. I/Os are defined by a mezzanine board.

HARDWARE

VETAR2 is a VME interface board based on an Altera Arria II GX FPGA. Board features:

- Altera Arria II GX FPGA (EP2AGX125EF29C) with 124K LEs. Information about the Arria family and the pinout of this FPGA.

- SFP connector and cage for White-Rabbit interfacing.

- Two board-to-board connectors.

- 3 x Clock Oscillators 100 MHz, 2 x 125 MHz.

- White-Rabbit (WR) interface connector.

- Trigger connector (8 differential MLVDS).

- USB Interface (USB micro connector).

- JTAG Interface (micro USB connector), auxiliary connector.

- SPI Flash Memory 128 Mb.

- Two LEMO connectors.

- Two one-wire ROMs/EEPROMs.

- 16 x user LEDs

- LCD Graphic Display connector

- Two logic analyzer connectors (16 channels)

- Two Rotary Switches for VME address offset.

- User Push Button

- Power status LEDs.

- SRAM 18 Mbit

GATEWARE AND SOFTWARE

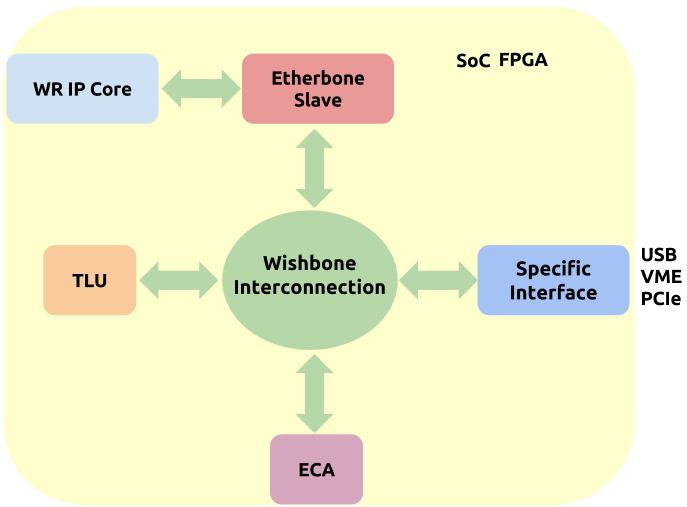

FAIR requires multiple form factor variants of timing receiver nodes. To reduce the maintenance effort, all of our different FPGAs use a common system on chip, shown in the figure above. The design is centered on the Wishbone bus, which connects the standard timing receiver functionality with the form-factor-specific bus interfaces. The standard functionality included in every timing receiver consists of the White Rabbit core, an Event-Condition-Action (ECA) scheduler and a timestamp latch unit (TLU). In this case the form factor is VME.

White Rabbit Core

The White Rabbit PTP Core is an Ethernet MAC implementation capable of providing precise timing. It can be used for sending and receiving regular Ethernet frames between user-defined HDL modules and a physical medium. It also implements the White Rabbit protocol to provide sub-nanosecond time synchronization.

VME Host Bridge Core

The VETAR uses a VME-to-Wishbone HDL core, the legacy vme64x core. It conforms to the ANSI/VITA VME and VME64 standards. Its main features are:

- “Plug and play” capability: the core provides a CR/CSR space whose base address is set automatically from the geographical address lines, so it does not have to be set with jumpers or switches on the board.

- The core supports SINGLE, BLT (D32), MBLT (D64) transfers in A16, A24, A32 and A64 address modes and D08 (OE), D16, D32, D64 data transfers.

- The core can be configured via the CR/CSR configuration space.

- ROAK (release on acknowledge) type IRQ controller with one interrupt input, a programmable interrupt level and a Status/ID register.
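On the bus, the transfer types listed above are selected by the 6-bit VME address modifier (AM) code, and the same codes show up later in the /proc/vme/windows output (0x2f, 0x08, 0x38, 0x39). The sketch below is a small lookup table covering only the A16, A24, A32 and CR/CSR codes defined by VME64 (A64 and lock cycles are omitted). `AM_CODES` and `describe_am` are hypothetical names for illustration, not part of the vmebus driver or its user space libraries.

```python
from typing import NamedTuple


class AddressModifier(NamedTuple):
    """Meaning of a 6-bit VME address modifier (AM) code."""
    space: str      # address space: A16, A24, A32 or CR/CSR
    privilege: str  # "supervisory", "user" (non-privileged) or "n/a"
    cycle: str      # cycle type: data, program, BLT or MBLT


# Subset of the VME64 AM codes (no A64, no lock cycles).
AM_CODES = {
    # A24 (standard) address space
    0x3F: AddressModifier("A24", "supervisory", "BLT"),
    0x3E: AddressModifier("A24", "supervisory", "program"),
    0x3D: AddressModifier("A24", "supervisory", "data"),
    0x3C: AddressModifier("A24", "supervisory", "MBLT"),
    0x3B: AddressModifier("A24", "user", "BLT"),
    0x3A: AddressModifier("A24", "user", "program"),
    0x39: AddressModifier("A24", "user", "data"),
    0x38: AddressModifier("A24", "user", "MBLT"),
    # CR/CSR configuration space (VME64)
    0x2F: AddressModifier("CR/CSR", "n/a", "data"),
    # A16 (short) address space
    0x2D: AddressModifier("A16", "supervisory", "data"),
    0x29: AddressModifier("A16", "user", "data"),
    # A32 (extended) address space
    0x0F: AddressModifier("A32", "supervisory", "BLT"),
    0x0E: AddressModifier("A32", "supervisory", "program"),
    0x0D: AddressModifier("A32", "supervisory", "data"),
    0x0C: AddressModifier("A32", "supervisory", "MBLT"),
    0x0B: AddressModifier("A32", "user", "BLT"),
    0x0A: AddressModifier("A32", "user", "program"),
    0x09: AddressModifier("A32", "user", "data"),
    0x08: AddressModifier("A32", "user", "MBLT"),
}


def describe_am(code: int) -> str:
    """Return a short description of an AM code, e.g. '0x08: A32 user MBLT'."""
    if not 0 <= code <= 0x3F:
        raise ValueError(f"AM codes are 6 bits wide, got {code:#x}")
    am = AM_CODES.get(code)
    if am is None:
        return f"{code:#04x}: not in this table"
    if am.space == "CR/CSR":
        return f"{code:#04x}: CR/CSR"
    return f"{code:#04x}: {am.space} {am.privilege} {am.cycle}"


if __name__ == "__main__":
    # AM codes that appear in the /proc/vme/windows listing on this page
    for code in (0x2F, 0x08, 0x38, 0x39):
        print(describe_am(code))
```

The driver's own labels ("A32MBLT USER", "A24 USER DATA") use "USER" for what the standard calls non-privileged access.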

Drivers

To access the VETAR over the VME bus you need the vmebus kernel module. The driver configures and accesses the PCI-VME bridge, a Tundra Tsi148. It creates windows in which the address and data access modes are predefined; up to eight windows (Window 0 to Window 7) can be created.

Etherbone Driver

Etherbone is an FPGA core that connects Ethernet to internal on-chip Wishbone buses, allowing any core to talk to any other across Ethernet, PCIe, USB and VME. An Etherbone driver can be loaded on top of the vmebus kernel module, allowing Etherbone operations over the VME bus.

Software and Test

There is a collection of libraries (C and Python) that exports all the needed functionality of the VME bus to user space. These libraries make it easy to develop software that uses the VME bus to communicate with the VETAR card. There is also a test suite that checks whether the driver and the user space applications are working properly. (All the firmware described here applies to the MEN A20, not to the RIO III.)

SETUP

This section describes how to get a VME host system with the VETAR card up and running, using this tarball, which contains all the BEL projects and the firmware needed:

- Bitstream (gateware and software) for the VETAR card

- vmebus driver

- user space software

VME Platform

To use the VETAR card, you need to set up a typical VME host system: a VME crate and a VME CPU controller. So far, the following CPUs have been used with the VETAR:

- MEN A20

- RIO III

MEN A20

The VME controller CPU runs Debian Wheezy with a 3.2 kernel. It boots from an NFS server but can also boot from CompactFlash. Describing how to set up an NFS server and create a root file system is beyond the scope of this documentation, but it is quite straightforward. If you have problems with the default BIOS of the card, update the firmware. Copy the update to a CompactFlash card:

dd if=BIOS_update_MENA20 of=/dev/sdX

Plug the CompactFlash card into the slot, plug in the VME CPU, power on, wait 120 seconds and reboot. That's it. (The BIOS image is too big to upload here; please send an email to c.prados@gsi.de to get it.)

RIO III

I do not support this CPU directly, since it uses a particular Linux flavor and kernel. For problems with the BIOS, driver or user space software, please contact J.Adamczewski-Musch@gsi.de.

To use the VETAR, you need to set up a VME host system and a Data Master. Follow the directions in Configure a Data Master to set up a system that can control FECs over the network. The VETAR card is equipped with an Altera FPGA. For programming and flashing it, see the section Programming and Flashing an Altera Device for more details.

Programming/Flashing the VETAR

You have two options: program the VETAR card using the Quartus tools and a JTAG adapter, or program it using the Etherbone tools.

JTAG Programming

You need to have Quartus installed and to unpack the [bitstream](https://www-acc.gsi.de/wiki/pub/Timing/TimingSystemNodesReleaseR1VETAR2/vetar_bitstream.tar.gz) tarball. Then program the FPGA over JTAG:

$(QUARTUS_BIN)/quartus_pgm -c BLASTER -m jtag -o 'p;vetar.sof'

ETHERBONE Programming

You need to have the Etherbone libraries and tools installed; see the Etherbone project for more information on how to install and use them. For example:

eb-flash <proto/host/port> <firmware>

VME Base Address, WB Base Address and WB Ctrl Base Address

The VETAR card has two hex switches, VN1 and VN2; so far only VN1 is used. With this switch you set the base addresses for the VME bus and the Wishbone bus. The VME core is a legacy core that can also be used with VME64x cards and crates. For that reason, the position of VN1 is mapped to the VME base address, and the switch position can also be seen as the slot into which you should plug the card (this is a recommendation, not mandatory).

| Switch Position | VME Base Address | WB Base Address | WB Ctrl Base Address |
|---|---|---|---|
| 1 | 0x80000 | 0x10000000 | 0x400 |
| 2 | 0x100000 | 0x20000000 | 0x800 |
| 3 | 0x180000 | 0x30000000 | 0xC00 |
| ... | ... | ... | ... |
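The documented rows follow a linear pattern: each step of VN1 adds 0x80000 to the VME base address (the 512 KiB per-slot spacing that VME64x uses for CR/CSR space), 0x10000000 to the Wishbone base address and 0x400 to the Wishbone control base address, which gives 0xC00 for position 3. Below is a minimal sketch of that calculation, assuming the pattern also holds for the positions not listed in the table and that only positions 1 to 15 are used. `addresses_for_position` and `VetarAddresses` are hypothetical names, not part of the VETAR software.

```python
from typing import NamedTuple

# Per-position steps read off the documented rows of the address table.
VME_STEP = 0x80000        # 512 KiB, the VME64x CR/CSR spacing per slot
WB_STEP = 0x10000000      # Wishbone base address step
WB_CTRL_STEP = 0x400      # Wishbone control base address step


class VetarAddresses(NamedTuple):
    vme_base: int
    wb_base: int
    wb_ctrl_base: int


def addresses_for_position(position: int) -> VetarAddresses:
    """Compute the base addresses for a VN1 switch position.

    Assumption: the linear pattern of positions 1 to 3 holds for every
    position from 1 to 15 (0xF, the largest hex switch value).
    """
    if not 1 <= position <= 0xF:
        raise ValueError(f"unsupported VN1 position: {position}")
    return VetarAddresses(
        vme_base=position * VME_STEP,
        wb_base=position * WB_STEP,
        wb_ctrl_base=position * WB_CTRL_STEP,
    )


if __name__ == "__main__":
    for pos in (1, 2, 3):
        a = addresses_for_position(pos)
        print(f"{pos} | {a.vme_base:#x} | {a.wb_base:#x} | {a.wb_ctrl_base:#x}")
```

For position 1 this reproduces the values reported later by vme_cs_csr_cfg (Wishbone base 10000000, control base 400).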

Driver and User Space Software Deployment

Download the bel_projects tarball and unpack it:

tar xvf bel_projects.tar.gz

or clone the repository:

git clone https://github.com/stefanrauch/bel_projects.git
cd bel_projects
git submodule init
git submodule update
git checkout vetar_v0.1
git submodule update

Then compile the drivers:

make etherbone
make etherbone-install
make driver
make driver-install

Everything you need to use the VME bus will be compiled and installed.

Etherbone over VME

To load the needed drivers:

cd bel_projects
./drv_load

From this point on you can use either the raw VME access commands or the Etherbone commands, for example:

#eb-ls dev/wbm0
BusPath VendorID Product BaseAddress(Hex) Description
1 0000000000000651:eef0b198 0 WB4-Bridge-GSI
1.1 000000000000ce42:66cfeb52 0 WB4-BlockRAM
1.2 0000000000000651:eef0b198 20000 WB4-Bridge-GSI
1.2.1 000000000000ce42:ab28633a 20000 WR-Mini-NIC
1.2.2 000000000000ce42:650c2d4f 20100 WR-Endpoint
1.2.3 000000000000ce42:65158dc0 20200 WR-Soft-PLL
1.2.4 000000000000ce42:de0d8ced 20300 WR-PPS-Generator
1.2.5 000000000000ce42:ff07fc47 20400 WR-Periph-Syscon
1.2.6 000000000000ce42:e2d13d04 20500 WR-Periph-UART
1.2.7 000000000000ce42:779c5443 20600 WR-Periph-1Wire
1.2.8 0000000000000651:68202b22 20700 Etherbone-Config
2 0000000000000651:10051981 100000 GSI_TM_LATCH
3 0000000000000651:8752bf44 100800 ECA_UNIT:CONTROL
4 0000000000000651:8752bf45 100c00 ECA_UNIT:EVENTS_IN
5 0000000000000651:b77a5045 100d00 SERIAL-LCD-DISPLAY
6 0000000000000651:2d39fa8b 200000 GSI:BUILD_ID ROM
7 0000000000000651:5cf12a1c 4000000 SPI-FLASH-16M-MMAP
8 0000000000000651:9326aa75 100900 IRQ_VME

The VME core provides both legacy VME interrupts and MSI interrupts. One way to test the MSI interrupts is as follows. On the VME CPU, snoop on the address range of IRQ_VME (0x100900):

#eb-snoop -v 8898 0x100900-0x1009ff dev/wbs0

Then, from another interface, write to this address range to trigger an MSI interrupt:

#eb-write dev/ttyUSB0 0x100900/4 0xa
#eb-write dev/ttyUSB0 0x100900/4 0xab
#eb-write dev/ttyUSB0 0x100900/4 0xabc
#eb-write dev/ttyUSB0 0x100900/4 0xabd

The snooping side reports each write:

#eb-snoop -v 8898 0x100900-0x1009ff dev/wbs0
Received write to address 0x100900 of 32 bits: 0xa
Received write to address 0x100900 of 32 bits: 0xab
Received write to address 0x100900 of 32 bits: 0xabc
Received write to address 0x100900 of 32 bits: 0xabd
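The snoop range used in this test, 0x100900-0x1009ff, is the IRQ_VME base address reported by eb-ls followed by a 0x100-byte window. Below is a minimal sketch that looks up a device's base address in eb-ls output captured as text and builds such a range. It assumes the column layout of the listing on this page (BusPath, VendorID:Product, hex base address, description), and the 0x100 window size is taken from this example, not from an IRQ_VME specification. `find_base_address` and `snoop_range` are hypothetical helpers.

```python
# A few lines copied from the eb-ls listing on this page.
SAMPLE_EB_LS = """\
BusPath VendorID Product BaseAddress(Hex) Description
1 0000000000000651:eef0b198 0 WB4-Bridge-GSI
2 0000000000000651:10051981 100000 GSI_TM_LATCH
6 0000000000000651:2d39fa8b 200000 GSI:BUILD_ID ROM
8 0000000000000651:9326aa75 100900 IRQ_VME
"""


def find_base_address(eb_ls_output: str, description: str) -> int:
    """Return the base address of the first device with this description."""
    for line in eb_ls_output.splitlines():
        # maxsplit=3 keeps descriptions containing spaces ("GSI:BUILD_ID ROM") intact
        fields = line.split(maxsplit=3)
        if len(fields) == 4 and fields[3] == description:
            return int(fields[2], 16)
    raise KeyError(f"device {description!r} not found")


def snoop_range(base: int, size: int = 0x100) -> str:
    """Format an inclusive 'first-last' range as used on the eb-snoop command line above."""
    return f"{base:#x}-{base + size - 1:#x}"


if __name__ == "__main__":
    irq_vme = find_base_address(SAMPLE_EB_LS, "IRQ_VME")
    print(snoop_range(irq_vme))
```

Run as a script, it prints the range used in the eb-snoop command above.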

VME DRIVER INFORMATION

Driver information is essential for checking that the VME driver and gateware work correctly. The vmebus driver provides a lot of information through the proc file system. General information:

# cat /proc/vme/info
PCI-VME driver v1.4 (May, 19 2010)
chip revision: 00000001
chip IRQ: 17
VME slot: 0
VME SysCon: Yes
chip registers at: 0xf8222000

Information about the windows created, their mappings and sizes:

# cat /proc/vme/windows
PCI-VME Windows
===============
va PCI VME Size Address Modifier Data Prefetch
and Description Width Size
----------------------------------------------------------------------------
Window 0: Active - 1 user
f9b00000 81010000 00080000 00080000 - (0x2f)CR/CSR / D32 NOPF
Mappings:
0: f9b00000 81010000 00080000 00080000 - (0x2f)CR/CSR / D32 NOPF
client: kernel
Window 1: Active - 1 user
f9c00000 81090000 10000000 01000000 - (0x08)A32MBLT USER / D32 NOPF
Mappings:
0: f9c00000 81090000 10000000 01000000 - (0x08)A32MBLT USER / D32 NOPF
client: kernel
Window 2: Active - 1 user
fac80000 82090000 00000000 01000000 - (0x38)A24MBLT USER / D32 NOPF
Mappings:
0: fac80400 82090400 00000400 000000a0 - (0x38)A24MBLT USER / D32 NOPF
client: kernel
Window 3: Active - No users
fbd00000 83090000 00100000 00080000 - (0x2f)CR/CSR / D32 NOPF
Window 4: Active - No users
fbe00000 83110000 00200000 00100000 - (0x08)A32MBLT USER / D32 NOPF
Window 5: Not Active
Window 6: Not Active
Window 7: Active - 1 user
f8a80000 80010000 00000000 01000000 - (0x39)A24 USER DATA / D32 NOPF
Mappings:
0: f9a7f000 8100f000 00fff000 00001000 - (0x39)A24 USER DATA / D32 NOPF
client: kernel
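Each window or mapping line lists four hex columns (va, presumably the kernel virtual address; the PCI address; the VME start address; the size), followed by the AM code, a description, the data width and the prefetch setting. Below is a minimal sketch that parses one such line under that reading of the header. For example, Window 1 covers A32 addresses 0x10000000 to 0x10ffffff, which starts at the Wishbone base address listed for VN1 position 1. `parse_window_line` and `VmeWindow` are hypothetical names.

```python
import re
from typing import NamedTuple, Optional

WINDOW_RE = re.compile(
    r"^\s*(?:\d+:\s+)?"                      # optional mapping index, e.g. "0: "
    r"([0-9a-f]{8})\s+([0-9a-f]{8})\s+"      # va, PCI address
    r"([0-9a-f]{8})\s+([0-9a-f]{8})\s+-\s+"  # VME address, size
    r"\((0x[0-9a-f]{2})\)(.*?)\s*/\s*"       # AM code, description
    r"(D\d+)\s+(\S+)\s*$",                   # data width, prefetch setting
    re.IGNORECASE,
)


class VmeWindow(NamedTuple):
    va: int
    pci: int
    vme: int
    size: int
    am: int
    description: str
    data_width: str
    prefetch: str

    @property
    def vme_end(self) -> int:
        """Last VME address covered by the window (inclusive)."""
        return self.vme + self.size - 1


def parse_window_line(line: str) -> Optional[VmeWindow]:
    """Parse one window/mapping line; return None for other lines."""
    m = WINDOW_RE.match(line)
    if m is None:
        return None
    va, pci, vme, size, am = (int(g, 16) for g in m.group(1, 2, 3, 4, 5))
    return VmeWindow(va, pci, vme, size, am,
                     m.group(6).strip(), m.group(7), m.group(8))


if __name__ == "__main__":
    w = parse_window_line(
        "f9c00000 81090000 10000000 01000000 - (0x08)A32MBLT USER / D32 NOPF")
    print(hex(w.vme), hex(w.vme_end), w.description)
```

Lines such as "Window 5: Not Active" or "client: kernel" do not match and yield None, so the function can be applied to every line of the file.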

Information about the IRQs and their handlers:

# cat /proc/vme/irq
Vector Count Client
------------------------------------------------
1 0 wb_irq

# cat /proc/vme/interrupts
Source Count
--------------------------
DMA0 0
DMA1 0
MB0 0
MB1 0
MB2 0
MB3 0
LM0 0
LM1 0
LM2 0
LM3 0
IRQ1 0
IRQ2 0
IRQ3 0
IRQ4 0
IRQ5 0
IRQ6 0
IRQ7 0
PERR 0
VERR 1
SPURIOUS 0
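In the /proc/vme/interrupts listing, every counter is zero except VERR (presumably the Tsi148 VME bus error interrupt), which counted one event. When monitoring a system, it can help to turn this listing into a dictionary and report only the non-zero counters. The sketch below assumes the "source count" line layout shown on this page; `parse_interrupts` and `nonzero` are hypothetical helpers.

```python
from typing import Dict

# The /proc/vme/interrupts listing from this page, as captured text.
SAMPLE_INTERRUPTS = """\
Source Count
--------------------------
DMA0 0
DMA1 0
MB0 0
MB1 0
MB2 0
MB3 0
LM0 0
LM1 0
LM2 0
LM3 0
IRQ1 0
IRQ2 0
IRQ3 0
IRQ4 0
IRQ5 0
IRQ6 0
IRQ7 0
PERR 0
VERR 1
SPURIOUS 0
"""


def parse_interrupts(text: str) -> Dict[str, int]:
    """Map each interrupt source to its counter; header lines are skipped."""
    counts: Dict[str, int] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[1].isdigit():
            counts[fields[0]] = int(fields[1])
    return counts


def nonzero(counts: Dict[str, int]) -> Dict[str, int]:
    """Keep only the sources that have fired at least once."""
    return {src: n for src, n in counts.items() if n}


if __name__ == "__main__":
    print(nonzero(parse_interrupts(SAMPLE_INTERRUPTS)))
```

On a live system, the same functions could be applied to the contents of /proc/vme/interrupts read with `open()`.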

Testing FESA

To test the FESA class and the ECA unit, log into the VME CPU:

ssh root@kp1cx02

and execute:

/opt/fesa/local/scripts/start_BeamProcessDU_M.sh -f "-usrArgs -WR"

In another terminal, stimulate it:

eca-ctl dev/wbm0 send 0x007b004000000000 0x12343456 0xabd +0.1

You should see something like:

cycleName WhiteRabbit.USER.47.11.0
TRACE: src/BeamProcessClass/RealTime/MyRTAction.cpp: execute: Line 51
MyRTAction::execute start
formatId: 0
groupId: 123
eventNumber: 4
sequenceId: 0
beamProcessId: 0
sequenceCounter: 0
Event: Timing::WhiteRabbit-Event-04#4
Value Setting-Field: 0
MyRTAction::execute stop
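The FESA trace shows how the 64-bit event ID passed to eca-ctl is interpreted: 0x007b004000000000 is reported as formatId 0, groupId 123 (0x07b) and eventNumber 4 (0x004). That is consistent with the top 28 bits holding a 4-bit format ID, a 12-bit group ID and a 12-bit event number. Below is a minimal sketch of that split, under this assumption only. The layout of the remaining 36 bits (sequence ID, beam process ID and so on) cannot be derived from this single example, so the sketch neither decodes nor sets them. All names are hypothetical.

```python
from typing import NamedTuple


class EventIdHead(NamedTuple):
    """Top fields of an event ID, as inferred from the FESA trace above."""
    format_id: int     # assumed bits 63..60
    group_id: int      # assumed bits 59..48
    event_number: int  # assumed bits 47..36


def decode_event_id(event_id: int) -> EventIdHead:
    """Extract the assumed format ID, group ID and event number."""
    if not 0 <= event_id < 1 << 64:
        raise ValueError("event ID must be an unsigned 64-bit value")
    return EventIdHead(
        format_id=(event_id >> 60) & 0xF,
        group_id=(event_id >> 48) & 0xFFF,
        event_number=(event_id >> 36) & 0xFFF,
    )


def encode_event_id(format_id: int, group_id: int, event_number: int) -> int:
    """Build an event ID with the lower 36 bits left at zero."""
    if not (0 <= format_id <= 0xF and 0 <= group_id <= 0xFFF
            and 0 <= event_number <= 0xFFF):
        raise ValueError("field out of range")
    return (format_id << 60) | (group_id << 48) | (event_number << 36)


if __name__ == "__main__":
    print(decode_event_id(0x007B004000000000))
    print(f"{encode_event_id(0, 123, 4):#018x}")
```

With these definitions, encoding group 123 and event 4 reproduces the ID used in the eca-ctl command above.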

Testing

The folder /bel_projects/ip_cores/legacy-vme64x-core/drv contains the following subfolders with an extensive test suite:

- drvrtest

- test

- pytest (python tests)

- test_vetar

#vme_cs_csr_cfg 1
****************************************
*CONFIGURATION VME VETAR CARD IN SLOT 1*
****************************************
Vendor ID: 80031
Wisbone bus base address: 10000000
Wisbone Ctrl bus base address: 400
WB interface: 32 bits
IRQ Vector: 1
IRQ Level: 7
Error Flag: Not asserted
WB Bus: Enabled

This shows the configuration of the CR/CSR space of the VME core. It is IMPORTANT to make SURE that you have configured the VME interface correctly before blaming the developer (as usual) for a bug!
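Since most problems turn out to be configuration issues, it can help to check the vme_cs_csr_cfg output mechanically. Below is a minimal sketch that reads the "key: value" lines of the output captured as text and compares them with the values expected for a given VN1 position. It assumes the printed addresses are hex without a 0x prefix and that the expected addresses follow the linear pattern of the address table (0x10000000 and 0x400 per position). The key names are copied as printed by the tool, including its "Wisbone" spelling; `parse_csr_cfg` and `check_csr_cfg` are hypothetical helpers.

```python
from typing import Dict, List

# The vme_cs_csr_cfg output from this page, as captured text.
SAMPLE_CFG = """\
****************************************
*CONFIGURATION VME VETAR CARD IN SLOT 1*
****************************************
Vendor ID: 80031
Wisbone bus base address: 10000000
Wisbone Ctrl bus base address: 400
WB interface: 32 bits
IRQ Vector: 1
IRQ Level: 7
Error Flag: Not asserted
WB Bus: Enabled
"""


def parse_csr_cfg(text: str) -> Dict[str, str]:
    """Collect 'key: value' lines; banner lines without a colon are skipped."""
    fields: Dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip():
            fields[key.strip()] = value.strip()
    return fields


def check_csr_cfg(fields: Dict[str, str], position: int) -> List[str]:
    """Return a list of problems; an empty list means the check passed."""
    problems: List[str] = []
    expected = {
        "Wisbone bus base address": position * 0x10000000,
        "Wisbone Ctrl bus base address": position * 0x400,
    }
    for key, want in expected.items():
        got = fields.get(key)
        if got is None or int(got, 16) != want:
            problems.append(f"{key}: expected {want:x}, got {got}")
    if fields.get("WB Bus") != "Enabled":
        problems.append(f"WB Bus: {fields.get('WB Bus')}")
    if fields.get("Error Flag") != "Not asserted":
        problems.append(f"Error Flag: {fields.get('Error Flag')}")
    return problems


if __name__ == "__main__":
    print(check_csr_cfg(parse_csr_cfg(SAMPLE_CFG), position=1) or "OK")
```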

I/O CONFIGURATION

- Release Compatibility: R1

- Date:

- Hardware:

- VETAR2 carrier board

- VETAR1DB2 mezzanine board

- I/O mezzanine

- TTLIN1 : N/A

- TTLIN2 : N/A

- TTLOUT1 : ECA channel0 -> eca_gpio0

- TTLOUT2 : ECA channel1 -> eca_gpio1

- TTLOUT3 : ECA channel2 -> eca_gpio2

- TTLOUT4 : ECA channel3 -> eca_gpio3

- TTLOUT5 : ECA channel4 -> eca_gpio4

- TTLOUT6 : ECA channel5 -> eca_gpio5

- LVDS1 : ECA channel0 -> eca_gpio0

- LVDS2 : ECA channel1 -> eca_gpio1

- LVDS3 : N/A

- LVDS4 : N/A

- LVDS5 : N/A

- TRIGGER : N/A

- HDMI:

- HDMI_OUT_0 : pps

- HDMI_OUT_1 : ref clk

- HDMI_OUT_2 : N/A

- HDMI_IN_1 : N/A

- I/O carrier

- LM1_O : pps

- LM2_I : TLU trigger0

- Gateware and Firmware

- TLU

- triggers/fifos: 1

- fifo depth: 10

- ECA

- channels: 2

- condition table size: 7

- action queue length: 8

- Images

- vetar.tar.gz

- TLU

- Documentation

- top file

- vetar_bitstream.tar.gz: vetar_bitstream.tar.gz

- bel_projects.tar.gz: bel_projects.tar.gz



Topic revision: r22 - 06 Nov 2014, DietrichBeck

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
